The Best Execution: Trader Or Client?

By

Professor Michael Mainelli and Mark Yeandle

Published by Fund AIM, Volume 1, Number 1, Investor Intelligence Partnership, pages 43-46.

Trading Is Theft?

There is an unresolved paradox at the heart of the current best execution debate. Regulators seek to protect clients who rely on investment firms’ trading skills, yet they propose to do so by taking decisions out of traders’ hands. Current proposals for best execution seem to assume traders are unnecessary or to wish they didn’t exist.

The European Union’s Markets in Financial Instruments Directive (MiFID) Article 21 places an obligation on investment firms from 1 November 2007 to “execute orders on terms most favourable to the client” – ‘best execution’. Best execution should hardly be contentious. Yes, best execution is more than just price – it’s also timing, liquidity, cost, speed, settlement, confidentiality and likelihood of execution. Let investment firms take a number of parameters into account on behalf of their clients. Let investment firms use their judgement. Make sure that consistently poor order execution is punished.

But look more closely. Regulators favour two categories of proposals: specifying ‘policies’ and ‘trade-by-trade benchmarking’.

It’s My Policy And I’ll Outcry If I Want To

Policies are all fine and good to propose. There are three types: (1) our policy is so vague it permits us to do business as we-always-have-done, always-have-foreseen, might-even-vaguely-think-about-doing, and haven’t-as-yet-dreamed-about-doing-but-might-want-to-after-a-good-night-out; (2) watch out a bit and use your judgement on this topic; (3) this is a detailed wiring diagram of how things work, follow it slavishly without any deviation whatsoever or we’ll have you.

OK, so policies aren’t all fine and good. Type (1) policies are meaningless, yet many firms still hope that they can get away with them. Regulators are increasingly alert to the dangers of more meaningless paper in our over-papered sector.

Type (3) policies are more dangerous for investment firms. The ideal here is a set of clear rules such as “a FTSE250 to FTSE 100 share is best traded on the LSE order book” or “a long gilt futures contract over £500,000 must be traded on LIFFE”. In some cases, the rules can be clear. But in many cases, in fact the cases when clients most need investment firms, there needs to be judgement – which venue is likely to provide the liquidity? when should trades be split among venues? which venues should be avoided because of possible adverse price movements?

If we could specify the rules, we could let computers do the trading from start to finish, but we can’t. A completely clear set of rules might be nice to have, but it is impossible to state. Moreover, if everyone were locked in to such a set of specific rules, it would be nearly impossible for new trading venues to get going, thus hindering competition enormously. Folks who favour Type (3) policies want to live in a deterministic world, not a world with markets.

So we’d like some Type (2) policies – “keep an eye on this issue and we’ll be checking up with you”. But then we have to turn to the second category of proposals, benchmarks.

On The Bench

The basic idea is that there is a huge, mother-of-all-factor-engines that tells a trader at any one time where the best execution is to be had. There are two types of benchmark: (A) here are a few indicative facts about relevant trades; (B) the best combination of factors for this specific trade you are contemplating will be found on exchange X.

We already have (A); in fact that’s what traders do with the various information feeds and services their firms pay so much for. (B) is a bigger problem as regulators chase a dream. In February 2006 the Financial Services Authority (FSA) commissioned IBM Global Business Services to write ‘Options for Providing Best Execution in Dealer Markets’ (available online from May 2006). This paper examined and supported a proposal for a “benchmark modelling approach”. Having started by conceding that best execution means the best execution of a number of parameters, the study then just focused on price and concluded:

“We have given some consideration to whether there are viable alternatives to the benchmark modelling approach outlined here. Given the requirements to take “all reasonable steps to secure the best possible result”, and to demonstrate to clients, if required, that this has been done, we do not believe there are”.

There are significant problems with IBM’s support of the benchmark modelling approach, quite apart from their naïve focus on price alone. First, as they conceded, the approach is easiest for liquid ‘vanilla’ products on an exchange, but isn’t a liquid exchange where benchmark prices are already confidently provided or even where order matching often occurs? Second, to build such a benchmark modelling engine, don’t we need to build the biggest of all factor engines containing all imaginable influences on price from the globe’s many exchanges, a titanic job when current exchanges already stretch our systems? Third, various information sources need to be weighted in importance when setting a multi-venue benchmark – but who is going to say that for this fixed income product at this point in time our confidence in Bloomberg’s price is 43%, Reuters’ 38% and Bob-n-Mike’s BondWatch 19%? IBM? The FSA? Fourth, don’t we need to predict possible price movements before recommending venues or combinations of venues, i.e. predict markets? Finally, IBM was stumped at how folks might use their own recommendation:
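To make the third objection concrete, here is a minimal sketch of what a multi-venue benchmark would have to compute. The confidence weights are the illustrative figures from the paragraph above; the function name `weighted_benchmark` and the quoted prices are hypothetical, invented only for this example.

```python
def weighted_benchmark(quotes, weights):
    """Blend per-source prices into one benchmark using confidence weights."""
    if set(quotes) != set(weights):
        raise ValueError("every quoted source needs exactly one weight")
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    # Weighted average, normalised in case the weights do not sum to 1.
    return sum(quotes[source] * weights[source] for source in quotes) / total


# Weights are the article's illustrative confidences; prices are invented.
weights = {"Bloomberg": 0.43, "Reuters": 0.38, "BondWatch": 0.19}
quotes = {"Bloomberg": 101.20, "Reuters": 101.26, "BondWatch": 101.05}

benchmark = weighted_benchmark(quotes, weights)
print(f"benchmark price: {benchmark:.4f}")
```

The arithmetic is trivial. The difficulty lies in the `weights` dictionary: someone has to choose those numbers for every product at every moment, and the benchmark moves whenever they change.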

“How should the reference price be compared to the price of the trade? The quoted price may be: Limited to being no greater than a set number of basis points from a benchmark bid/offer − In this case, the actual quotes must be within these bounds; At a fixed number of basis points from a midprice or from a benchmark bid/offer. These models are unlikely to be favoured by the industry”.

How can an arbitrary and fixed number of basis points be used to judge execution quality in volatile markets? Again, the benchmark modelling approach seems to want to live in a world where traders are unnecessary – let the machine pick the best benchmark price. The financial services industry reacted negatively, and the FSA moved away from the approach in its October paper CP06/19: COB Reform (16.46): “In light of industry responses to our benchmarking proposals, we are minded not to propose any guidance with respect to benchmarking. We do, however, continue to think that benchmarking is a valid approach to compliance with MiFID’s best execution requirements in some circumstances.”
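The fixed basis-point test questioned above can be sketched in a few lines. This is an illustration only: the function names, the prices and the tolerances are assumptions, not figures from the IBM study or the FSA paper.

```python
def deviation_bps(trade_price, reference_price):
    """Signed distance of a trade from a reference price, in basis points."""
    if reference_price <= 0:
        raise ValueError("reference price must be positive")
    return (trade_price - reference_price) / reference_price * 10_000


def within_tolerance(trade_price, reference_price, max_bps):
    """True if the trade lies no more than max_bps from the reference."""
    return abs(deviation_bps(trade_price, reference_price)) <= max_bps


# Hypothetical trade roughly 12 basis points away from a benchmark mid-price.
reference = 100.00
trade = 100.12

print(round(deviation_bps(trade, reference), 2))
print(within_tolerance(trade, reference, max_bps=10))  # fails a 10 bp rule
print(within_tolerance(trade, reference, max_bps=15))  # passes a 15 bp rule
```

The same trade passes or fails depending solely on the chosen `max_bps`, which is the objection: a number fixed in advance knows nothing about how volatile the market was when the trade was struck.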

Trading Oversight

So we live in a world where we should recognise that traders, like many other professionals, perform a valuable role for clients that is not wholly understood. Yet, let’s not be complacent. There are opportunities for traders to abuse their privileged position with clients. As with so many areas of society, we recognise that even professionals need oversight. However, that oversight should be proportionate to the risk and not so costly that the professional advice is impossible to obtain or to use.

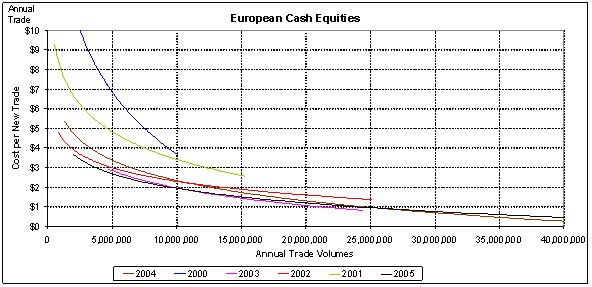

From Z/Yen’s industry-wide cost-per-trade benchmarking, we are conscious that there is significant cost pressure on participants that is often overlooked in best execution discussions.

The above graph, as just a sample from the many markets, shows radical cost-per-trade reductions over the past six years in European cash equities. It also shows increasing volumes, from a maximum of 10 million cash equity trades per annum by the largest market participant in 2000 to 40 million cash equity trades in 2005. With costs decreasing from several dollars per trade to less than one dollar, best execution requirements could significantly affect competition, perhaps even leading to further concentration and less innovation if they significantly increase cost-per-trade for smaller market participants.

Environmental Consistency Confidence

Currently most brokers rely on traditional management oversight, which cannot cope with today’s volumes. Too much can be missed. A few firms take some samples of trades and examine them, but at today’s volumes finding poor trades is like trying to find the proverbial needle in a haystack. Z/Yen has discussed this problem with many clients over the past few years and there is a clear demand for help with the requirements of Article 21, ‘best execution’. What is missing from current processes and systems is the ability to demonstrate, for large volumes, that each specific trade was executed at a reasonable price (or reasonable ‘other factor’) under the conditions prevailing at the time of the trade.

The only effective method of monitoring thousands of trades per week is to have an automated process identifying a reasonable set of anomalous trades for examination. Volume-weighted average price (VWAP), for instance, is frequently cited as a yardstick but is of little use in practice: VWAP variances vary markedly during the day and too many trades are identified as needing checking. Firms need a ‘sifting engine’ that identifies potentially anomalous trades, which are then checked. We call this method Environmental Consistency Confidence (ECC). Basically, firms should use automated processes to identify a (hopefully small) number of anomalous trades and then check what the traders were doing. Firms should also add a few randomly selected trades to the total to ensure completeness.
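The sift-then-sample workflow described above might look something like the following sketch. It is not the engine used in our work: the `sift` function, the `score` callable, the threshold and the sample trades are all hypothetical, and how the anomaly score is produced is deliberately left open.

```python
import random


def sift(trades, score, threshold, n_random=2, seed=None):
    """Split trades into flagged anomalies plus a few random extras.

    score     -- callable returning an anomaly score for one trade
    threshold -- scores above this are flagged for manual checking
    n_random  -- unflagged trades added at random to ensure completeness
    """
    flagged, unflagged = [], []
    for trade in trades:
        (flagged if score(trade) > threshold else unflagged).append(trade)
    rng = random.Random(seed)
    extras = rng.sample(unflagged, min(n_random, len(unflagged)))
    return flagged, extras


# Invented trades carrying a precomputed, hypothetical anomaly score.
scores = [0.1, 0.2, 3.5, 0.3, 0.15, 4.2, 0.05, 0.4]
trades = [{"id": i, "score": s} for i, s in enumerate(scores)]

flagged, extras = sift(trades, score=lambda t: t["score"],
                       threshold=3.0, n_random=2, seed=7)
print("check because anomalous:", [t["id"] for t in flagged])
print("check at random:", [t["id"] for t in extras])
```

Compliance staff would then review only the flagged trades and the random extras, so the workload depends on the threshold rather than on total trading volume.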

In 2004 Z/Yen undertook an informal trial of dynamic anomaly and prediction response (DAPR) software on bid-offer spreads for the small-cap trades of a broker. This trial indicated that the DAPR system might be good at identifying trading anomalies for compliance purposes within an ECC framework. A DAPR system can be based on numerous approaches, e.g. neural nets, Bayesian inference. Our research was based on ‘open-source’ Support Vector Machine mathematics, which has the advantage of being largely non-parametric, i.e. it changes and adapts to trading conditions without human input or adjustment.

Best Execution Compliance Automation

We then conducted a formal ECC feasibility study (the working name of the study was Best Execution Compliance Automation) using three months of London Stock Exchange trading data from four brokers comprising over 190,000 trades with a value of over £54bn in order to predict data for a fourth month. The objective was to see if DAPR software could predict a number of trade characteristics, in particular the likely price range of a trade (specifically, one of 20 price bands on a logarithmic scale). Two journal papers describing the study are available online:

- Michael Mainelli and Mark Yeandle, “Best Execution Compliance: New Techniques for Managing Compliance Risk”, Journal of Risk Finance, Volume 7, Number 3, pages 301-312, Emerald Group Publishing Limited (June 2006).

- Michael Mainelli and Mark Yeandle, “Best Execution Compliance: Towards An Equities Compliance Workstation”, Journal of Risk Finance, Volume 7, Number 3, pages 313-336, Emerald Group Publishing Limited (June 2006).

The project proved that DAPR could be used for successful ECC – the sifting engine (PropheZy) successfully predicted price movement bands and identified anomalies. Using these predictions, it was possible to set a level for best execution outliers or anomalies using price band prediction differences.
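As a rough illustration of banding and band-difference flagging, the sketch below maps a positive value to one of 20 logarithmic bands and flags a trade when the predicted and actual bands are too far apart. Only the figure of 20 bands comes from the study; the band range (`lo`, `hi`), the `max_band_gap` level and the function names are assumptions for this example, and the prediction itself is simply taken as given.

```python
import math

N_BANDS = 20  # the study used 20 bands; the range below is an assumption


def log_band(value, lo=0.01, hi=1000.0, n_bands=N_BANDS):
    """Map a positive value to a band index 0..n_bands-1 on a log scale."""
    if value <= 0:
        raise ValueError("log bands need a positive value")
    clipped = min(max(value, lo), hi)  # out-of-range values go to end bands
    span = math.log10(hi) - math.log10(lo)
    frac = (math.log10(clipped) - math.log10(lo)) / span
    return min(int(frac * n_bands), n_bands - 1)


def is_outlier(predicted_band, actual_band, max_band_gap=2):
    """Flag a trade whose actual band is far from the predicted band."""
    return abs(predicted_band - actual_band) > max_band_gap


# Hypothetical predicted and actual values for one trade.
predicted = log_band(2.0)
actual = log_band(50.0)
print(predicted, actual, is_outlier(predicted, actual))
```

Raising or lowering `max_band_gap` is how a firm would tune the number of anomalies sent for checking.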

Implications and Summary

ECC is a viable way of identifying trading anomalies. Moreover, it is a low-cost technical approach (tens of thousands, not hundreds of thousands). The approach is of use to organisations in the capital markets, viz.:

- brokers: brokers can implement an ECC system that has already been built, both to reduce cost and attract business;

- fund managers: fund managers can prove that they monitor broker performance and, quite naturally, focus that monitoring on unusual situations;

- regulators: with some modifications and enhancements, e.g. taking advantage of the privileged central view some regulators enjoy, regulators could apply ECC to market surveillance;

- exchanges: while brokers with large numbers of trades across different markets will probably need to implement on-site systems, exchanges have an opportunity to develop new revenue sources or improve services by providing ECC compliance systems and services to members, or to help members pool data to keep the number of ‘false positives’ low and thus ECC costs low (checking anomalies costs time and money).

| Approach | Set Policy | Trade Monitoring | Pre-Trade Benchmarking |
|---|---|---|---|
| Options | detail of policy; how often to review | DAPR; neural networks | average; enhanced with pricing models |
| Issues | too prescriptive = algorithmic rigidity, where's the human input?; too loose = no effect | post trade; degree of false positives | enormous industry difficulties; enormous technical difficulties; where's the human input? |
| Acceptability to Industry | high | medium | low |
| Acceptability to Regulators | low as proposed | under discussion | potentially high protection within complex environment |
| Ease and Cost | virtually nil | relatively inexpensive and gives competitive benefits | enormous if achievable; requires multiple players to act in concert |

We recognise the trade-offs between complete laissez-faire and some of the other heavy-handed approaches being considered for best execution, e.g. trade-by-trade benchmarking. We believe that there is a sensible middle ground, Environmental Consistency Confidence, and that it will benefit the industry to implement such sensible oversight of traders before regulators’ dreams accidentally eliminate traders’ ability to trade.

Professor Michael Mainelli, PhD FCCA FCMC MBCS CITP MSI, originally undertook aerospace and computing research, followed by seven years as a partner in a large international accountancy practice before a spell as Corporate Development Director of Europe’s largest R&D organisation, the UK’s Defence Evaluation and Research Agency, and becoming a director of Z/Yen (Michael_Mainelli@zyen.com). Michael was the British Computer Society’s Director of the Year for 2004/2005. Michael is Mercers’ School Memorial Professor of Commerce at Gresham College (www.gresham.ac.uk).

Mark Yeandle, MBA BA MCIM MBIM, originally worked in consumer goods marketing and held senior marketing positions at companies including Liberty and Mulberry. His experience includes launching new brands, company acquisitions & disposals and major change management programmes. Mark has been involved in many of Z/Yen’s recent research projects including a resourcing study, an anti-money laundering research project, and an evaluation of competitive stock exchange systems.

Z/Yen is a risk/reward management firm helping organisations make better choices. Z/Yen operates as a think-tank that implements strategy, finance, systems, marketing and intelligence projects in a wide variety of fields (www.zyen.com), such as developing an award-winning risk/reward prediction engine, helping a global charity win a good governance award or benchmarking transaction costs across global investment banks. Z/Yen’s humorous risk/reward management novel, “Clean Business Cuisine: Now and Z/Yen”, was published in 2000; it was a Sunday Times Book of the Week; Accountancy Age described it as “surprisingly funny considering it is written by a couple of accountants”.

Z/Yen Group Limited, 5-7 St Helen’s Place, London EC3A 6AU, United Kingdom; tel: +44 (0) 207-562-9562.